AI‑assisted programming

Programming Re-imagined

What it is

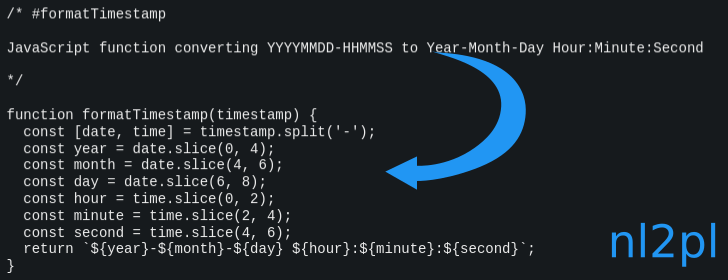

cmpr is an AI IDE where you write software in natural‑language (NL) blocks.

Our nl2pl compiler turns the specs you write into downstream programming‑language (PL) code, manages your build, and reliably keeps everything up to date as you make changes at the NL level.

NL and PL live together in ordinary code files. Blocks are defined by ordinary comments, so they are transparent to the downstream build system, which just sees ordinary files.
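To make the idea concrete, here is a minimal Python sketch of how a tool could split an ordinary C source file into NL/PL blocks. It assumes, purely for illustration, that a block starts at any line beginning with `/*`, that the comment holds the NL spec, and that the code after it is the PL. The names `Block` and `split_blocks` are hypothetical and are not part of cmpr or nl2pl.

```python
from dataclasses import dataclass


@dataclass
class Block:
    raw: str  # the block's exact text, comment included
    nl: str   # natural-language spec taken from the leading comment ("" if none)
    pl: str   # programming-language code that follows the comment


def split_blocks(source: str) -> list[Block]:
    """Split source text into blocks.

    Hypothetical convention, for illustration only: a new block starts at
    every line that begins with "/*".
    """
    chunks: list[list[str]] = []
    for line in source.splitlines(keepends=True):
        if line.startswith("/*") or not chunks:
            chunks.append([])  # start a new block
        chunks[-1].append(line)

    blocks = []
    for chunk in chunks:
        raw = "".join(chunk)
        if raw.startswith("/*") and "*/" in raw:
            end = raw.index("*/") + 2  # position just past the closing "*/"
            nl = raw[2:end - 2].strip()
            pl = raw[end:].lstrip("\n")
        else:
            nl, pl = "", raw  # leading code with no spec comment
        blocks.append(Block(raw=raw, nl=nl, pl=pl))
    return blocks


src = (
    "/* Return the sum of a and b. */\n"
    "int add(int a, int b) { return a + b; }\n"
    "/* Print a greeting. */\n"
    'void hi(void) { puts("hi"); }\n'
)
blocks = split_blocks(src)
assert "".join(b.raw for b in blocks) == src  # the file round-trips unchanged
```

Each block is just a slice of the file, so joining the raw text of all blocks gives back the original file byte for byte. A build system that knows nothing about blocks therefore sees nothing different.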

Meet PA (your programming assistant)

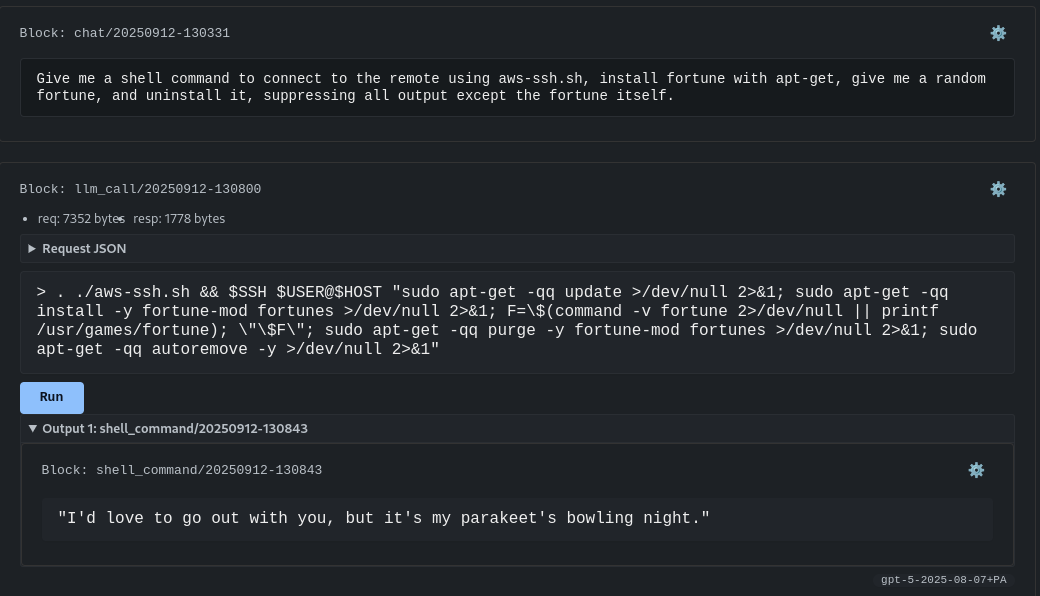

PA works with you at the NL layer and is an expert in cmpr, nl2pl, git, Linux, build tools, and more.

PA composes the exact commands to build, test, integrate, and deploy your code, and to manage the complete development environment we provide.

You approve what runs.

Everything happens in your managed Linux server environment with full history.

- Chat‑first: keep typing; nothing interrupts your flow until you invite PA with llm.

- Exact steps: PA proposes build, test, and deploy commands for you to accept.

- Controlled: approvals on every action; no surprises in your codebase or server environment.

Example

You: “Bring github.com/me/app into the project.”

PA: “I’ll create a deploy key for you to add to the repository on GitHub; then we’ll clone the repo and blockize your codebase so we can work at the NL layer. Here are the steps...”

You: “Approve.” → shell commands and cmpr operations run in your dedicated environment.

PA proposes; you have the final say.

How you use it (and why we love it)

More than just a chat bot.

You decide when PA takes a turn: type llm to let the system respond.

Between those turns, take notes, think out loud, run commands.

Use the remove and drop features to clean up the context, and use ed to edit anything: your own messages, a block's NL or PL code, files, even LLM output.

This not only saves tokens but also avoids confusing the AI with stale information.

Once you have brought the right context together in the chat, invite the system in with llm and get the focused response you want.

When LLMs misunderstand, it can be hard to correct them without overcorrecting.

Instead, just drop back to before the misunderstanding, add further clarification or context, and let it try again.

- Start a chat with a goal ("Let's add a user stats endpoint.").

- You can even use your chat list as an informal tracker of works in progress, and check things off when done (use namechat yourself, or ask PA to do it).

- Keep typing until you reach a point where you have a focused task for PA to handle ("rewrite this block to ..."), or until you want information or options before making a decision ("suggest a few JavaScript libraries for rendering diffs in the browser").

- PA can edit, add, or remove NL blocks as appropriate; nl2pl manages the translation layer; you control architecture, choose where blocks live, and set and maintain project standards and direction.

- Accept updates; nl2pl compiles NL → code and keeps the project consistent.

Workflow

NL edits → Accept → nl2pl update flow → build/test/iterate → Ship

Get access (limited beta)

We’re pacing access to focus on early users and make the experience excellent.

If signups are paused, you’ll join a short waiting list and we’ll contact you via your GitHub email.

Two ways to get in

Option 1 — GitHub + subscription

Continue with GitHub, subscribe with Stripe, and we provision your dedicated development server.

Programmer — Beta pricing

For individual, full‑time programmers.

Sensible token and resource limits.

Pricing is beta‑only and may change for GA.

(requires GitHub login)

Option 2 — Invite (7‑day trial)

Get an invite from an existing user and try cmpr free for 7 days.

Flow: sign in with GitHub, enter your invite token, and your environment is provisioned.

Invites help us pace demand and keep environments reliable and safe.

After the trial expires, you'll be able to download a tarball of your files, or subscribe to keep building.

- Invites are limited; existing users share a small pool of tokens.

- If you need help with access, email hello@cmpr.ai.

Who it’s for

Built for professional programmers, especially AI skeptics who want tight control, yet welcoming to learners and students exploring Linux and programming.

The tech behind it

cmpr maintains an intelligent, self‑updating graph of your blocks and their history and relationships.

Our nl2pl compiler uses that graph to update the PL layer, run health checks across your blocks, and surface issues for you to triage at your convenience, without interrupting you while you're focused.
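The Python sketch below shows one way a block graph could drive updates, offered as an illustration only. It assumes each block records the blocks it references (its blockrefs). When some blocks change, the sketch collects every block that transitively references them. It then orders the whole set so that referenced blocks are regenerated before the blocks that depend on them. The function `update_order` and this staleness rule are hypothetical and do not describe how nl2pl actually works.

```python
from graphlib import TopologicalSorter


def update_order(refs: dict[str, set[str]], changed: set[str]) -> list[str]:
    """Return the blocks to regenerate after `changed` blocks were edited.

    refs maps each block id to the ids of the blocks it references.
    The result contains the changed blocks plus every block that
    transitively references them, with referenced blocks listed first.
    """
    # Invert the edges so we can ask "which blocks reference this one?"
    referenced_by: dict[str, set[str]] = {}
    for block, deps in refs.items():
        for dep in deps:
            referenced_by.setdefault(dep, set()).add(block)

    # Walk outward from the changed blocks to find everything affected.
    stale = set(changed)
    frontier = list(changed)
    while frontier:
        for user in referenced_by.get(frontier.pop(), set()):
            if user not in stale:
                stale.add(user)
                frontier.append(user)

    # Keep only edges inside the stale set, then sort dependencies first.
    graph = {b: refs.get(b, set()) & stale for b in stale}
    return list(TopologicalSorter(graph).static_order())


refs = {
    "util": set(),
    "parser": {"util"},
    "cli": {"parser"},
    "docs": set(),
}
print(update_order(refs, {"util"}))  # ['util', 'parser', 'cli']
```

`graphlib.TopologicalSorter` (Python 3.9+) raises `graphlib.CycleError` if the references form a cycle. A real system would need to report or break such a cycle rather than crash.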

The nl2pl subsystem has a lot of moving parts and is the result of extensive R&D, begun in 2023, into the best ways to use generative AI effectively in large software projects; it's a lot more than just a wrapper over GPT.

Planned soon: Pro plan and Enterprise options.

For sales inquiries or larger teams, use /contact or email hello@cmpr.ai.

Roadmap

What’s next for cmpr.ai

🧪

cmpr 1 PoC

Established the feasibility of robust LLM code generation on complex software projects.

The key: providing the right context to the model for each block of code.

The solution: cmpr's blockrefs and block graph.

cmpr 1 is an open-source terminal application that validated the block database idea, with manual control over blockrefs by the programmer.

🛠️

cmpr.ai (cmpr 2)

Bringing beautiful UI/UX to the cmpr workflow, and introducing PA to help tame the complexity of cmpr 1.

Started on Apr 15, 2025, cmpr.ai went into private beta in late August. We believe it is the best way to write code with AI assistance today, and for greenfield work, the best way to program, period.

👩🏻‍💻

Programmer plan

Designed for the individual, full‑time programmer.

Semi-open signups while we remain in beta.

🌐

General Availability

New accounts are planned to be invite‑only to maintain quality and responsiveness.

🧠

Import and understand existing codebases

Powerful tools to ingest and understand an entire existing codebase, derive a navigable block graph from it, and generate NL code that recreates your existing PL codebase, fully automatically.

Designed to scale up to Linux‑kernel‑size repositories, this will be the intended workflow for bringing cmpr to established projects and legacy codebases alike.

👥

Pro / Team accounts

Team features will kick in when a second user is added to an existing account, and will include shared projects, chats, and history, plus light messaging features.

🏛️

Enterprise options

SSO, compliance, enterprise integrations, on‑prem deploys and local model options.

Some capabilities are planned for mid‑2026.